XRootD Buffer

Latest revision as of 17:12, 30 November 2017


UNDER CONSTRUCTION

General Design

Note, the definitive source of data rate/volume numbers is in DUNE DocDB #1086. The extracted numbers below may become out of date.

- A few bits of info:

  - 230MB per readout (all 6 APAs, 5ms window)

  - Lossless compression factor: 4

  - File size will determine the number of parallel writes and vice versa

  - Nominal "max top instantaneous rate": 3GB/s

  - Nominal spill is 4.5s

  - Spill cycle: 22.5s

  - Nominal data in spill: 13.5GB

  - Nominal data out of spill: 13.5GB

  - Average sustained: 1.2GB/s

- A proposal for the online buffer: DUNE DocDB 1628
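The nominal numbers are mutually consistent. Assuming both the in-spill and the out-of-spill volumes accumulate once per 22.5s spill cycle, the in-spill volume is the peak rate times the spill length, and the sustained average is the total per cycle divided by the cycle length. A quick Python check, using only the values listed above:

```python
# Back-of-envelope check of the nominal numbers listed above
# (DocDB 1086 remains the authoritative source).
MAX_RATE_GB_S = 3.0      # nominal "max top instantaneous rate"
SPILL_S = 4.5            # nominal spill length
SPILL_CYCLE_S = 22.5     # full spill cycle
OUT_OF_SPILL_GB = 13.5   # nominal data out of spill

in_spill_gb = MAX_RATE_GB_S * SPILL_S            # 3 GB/s * 4.5 s
per_cycle_gb = in_spill_gb + OUT_OF_SPILL_GB     # assumed: both accrue once per cycle
sustained_gb_s = per_cycle_gb / SPILL_CYCLE_S    # average over one cycle

print(f"in spill:  {in_spill_gb:.1f} GB")
print(f"per cycle: {per_cycle_gb:.1f} GB")
print(f"sustained: {sustained_gb_s:.2f} GB/s")
```

This prints 13.5 GB in spill, 27.0 GB per cycle and 1.20 GB/s sustained, matching the quoted figures.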

XRootD-FTS Interface

Design Considerations

- the ofs.notify directive can be set to send ASCII messages to a FIFO or to a user application/script

- the format and content of the message can be configured by the developer

- considerations for xrootd being blocked by a slow reader of the FIFO:

  - xrootd will buffer a specified number of messages to be sent to the recipient; once the limit is reached, messages are dropped and a record is made in the log file

  - the capacity of a Linux FIFO depends on the kernel version; since Linux 2.6.11 the default is 16 pages (64kB with 4kB pages), and since Linux 2.6.35 it can be changed per FIFO with fcntl(F_SETPIPE_SZ)
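A minimal, Linux-only Python sketch of the reader side: it creates the notification FIFO and reports its current capacity, which bounds how much xrootd can write before a slow reader starts to matter. The FIFO path and the `ofs.notify` line quoted in the docstring are illustrative assumptions, not the project's configuration; check the exact directive syntax against the XRootD OFS reference.

```python
import fcntl
import os
import tempfile

# F_GETPIPE_SZ is Linux-only; Python 3.10+ exposes it by name, older
# versions need the numeric value (1032) from <linux/fcntl.h>.
F_GETPIPE_SZ = getattr(fcntl, "F_GETPIPE_SZ", 1032)


def make_notify_fifo(path: str) -> None:
    """Create the FIFO that xrootd would be configured to write into.

    Hypothetical matching xrootd config line (verify against the OFS docs):
        ofs.notify closew >/path/to/this.fifo
    """
    try:
        os.mkfifo(path, 0o600)
    except FileExistsError:
        pass  # reuse a FIFO left over from a previous run


def fifo_capacity(path: str) -> int:
    """Return the kernel buffer size, in bytes, of the FIFO at `path`."""
    # O_NONBLOCK makes a read-only open return at once even with no writer.
    fd = os.open(path, os.O_RDONLY | os.O_NONBLOCK)
    try:
        return fcntl.fcntl(fd, F_GETPIPE_SZ)
    finally:
        os.close(fd)


if __name__ == "__main__":
    fifo = os.path.join(tempfile.mkdtemp(), "xrd-notify.fifo")  # hypothetical path
    make_notify_fifo(fifo)
    print(fifo, fifo_capacity(fifo))
```

On a typical x86-64 system with 4kB pages the reported capacity is 65536 bytes.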

Required Functionality

- Poll the FIFO file descriptor for waiting data.

- Parse the message.

- Record the message in persistent storage (eg, a database of some shape).

- Promptly notify the tasks that must handle the message.

- Return to polling ASAP.

- Multiple handlers of messages:

  - notifying F-FTS when both the DAQ data file and the metadata file are ready for reading

  - possibly running the metadata file producer (unless the DAQ handles this)

  - notifying the shift crew of errors via run control

  - notifying experts via various channels (email, SMS)

- Must have a recovery mode to replay notifications based on some criteria.
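The first five requirements (poll, parse, record, notify, return to polling) can be sketched as a small Python loop. It assumes a hypothetical one-line `<event> <lfn>` message layout (the real format is whatever the developer configures) and leaves persistent recording and the individual handlers to worker threads that consume the queue, so the loop itself only reads, parses and enqueues.

```python
import os
import queue
import select
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Notification:
    event: str  # e.g. "closew"; the "<event> <lfn>" layout is an assumption
    lfn: str    # logical file name reported by xrootd


def parse_line(line: str) -> Optional[Notification]:
    """Parse one '<event> <lfn>' line; return None if it is malformed."""
    parts = line.strip().split(maxsplit=1)
    if len(parts) != 2:
        return None
    return Notification(event=parts[0], lfn=parts[1])


def poll_fifo(fd: int, out: "queue.Queue[Notification]",
              timeout_ms: int = 1000, max_rounds: Optional[int] = None) -> None:
    """Poll `fd`, parse complete lines and hand them to worker threads.

    DB recording and the handlers run in the consumers of `out`.
    In production, open the FIFO with O_RDWR (Linux) so poll() does not
    spin on POLLHUP while no writer is attached.
    """
    poller = select.poll()
    poller.register(fd, select.POLLIN)
    pending = b""  # trailing bytes of a line not yet terminated by b"\n"
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        rounds += 1
        for _fd, mask in poller.poll(timeout_ms):
            if not mask & select.POLLIN:
                continue  # e.g. POLLHUP alone: nothing to read
            pending += os.read(fd, 65536)
            *lines, pending = pending.split(b"\n")
            for raw in lines:
                msg = parse_line(raw.decode("utf-8", errors="replace"))
                if msg is not None:
                    out.put_nowait(msg)  # unbounded queue: never blocks
```

Keeping a partial trailing line in `pending` matters because a single `read()` on a FIFO can return a message cut in the middle.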

High Level Design Options

Note, these are just strawman sketches.

Below are a few high level design options for how the buffer system will work internally. The subsections are ordered roughly by increasing complexity.

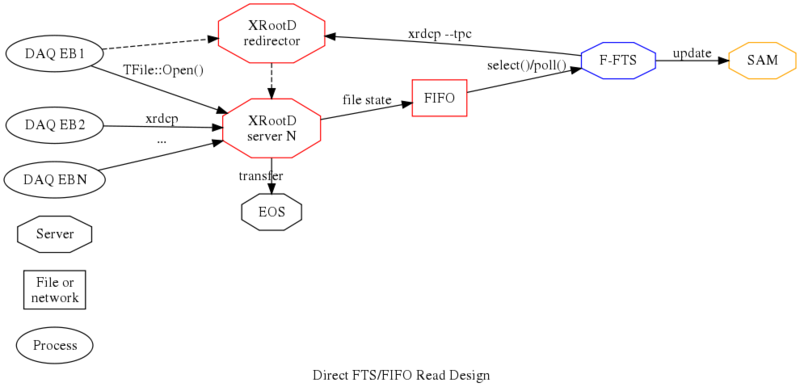

Direct FIFO Read

Basics:

- An F-FTS instance resides on each storage node.

- F-FTS is modified to directly read the XRootD notification FIFO.

- Each F-FTS responds to close events by initiating transfers to EOS.

- The transfer can be either a server-to-server (third-party) copy from XRootD to EOS, issued as "xrdcp --tpc" via the redirector, or an explicit copy that F-FTS makes from the file system to EOS.
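A Python sketch of the transfer command an F-FTS modified this way might issue on a close event, building a third-party `xrdcp` call. The host names and EOS directory are placeholders, and the `--tpc only` mode (copy server-to-server or fail, rather than falling back to a copy through the client) should be checked against the installed xrdcp version.

```python
import shlex
from typing import List

# Hypothetical endpoints; substitute the real redirector and EOS hosts.
REDIRECTOR = "root://buffer-redirector.example.org/"
EOS = "root://eos.example.org/"


def tpc_command(lfn: str, eos_dir: str) -> List[str]:
    """Build an xrdcp third-party-copy command for a closed DAQ file."""
    if not lfn.startswith("/") or not eos_dir.startswith("/"):
        raise ValueError("expected absolute paths")
    name = lfn.rsplit("/", 1)[-1]
    src = REDIRECTOR + lfn  # root://host/ + /path -> root://host//path
    dst = EOS + eos_dir.rstrip("/") + "/" + name
    # "--tpc only": server-to-server copy, fail instead of a client-side copy.
    return ["xrdcp", "--tpc", "only", src, dst]


if __name__ == "__main__":
    # Hypothetical file and directory names.
    print(shlex.join(tpc_command("/data/np04_run000001.dat", "/eos/np04/raw")))
```

F-FTS would then run the list with something like `subprocess.run(cmd, check=True)`; returning an argument list rather than a shell string avoids quoting problems with unusual file names.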

Questions:

- How does the metadata file producer get run on the storage nodes under this scenario?

- How do we synchronize data+metadata files?

- How do we recover/replay?

- What do we provide for monitoring?

Possible issues:

- The only record of actions on the buffer will be the XRootD and F-FTS logs. Understanding lost files or other problems requires "grep-ology".

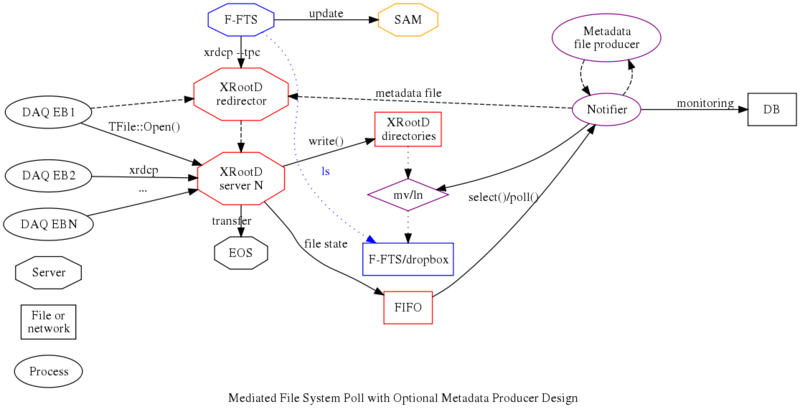

Mediated File System Poll with Metadata Production

- An F-FTS instance resides on each storage node.

- A "notifier" process is developed which runs on each storage node.

- It handles the XRootD close event for a DAQ data file.

- It runs the metadata file producer

- It creates the data+metadata files in the F-FTS dropbox (eg with atomic mv or ln)

- It can produce compact logs or even DB entries for its actions.
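The atomic hand-off to the dropbox could look like the following Python sketch. It assumes the dropbox sits on the same file system as the data (hard links and renames are only atomic within one file system), that F-FTS treats the metadata file as the trigger and ignores dot-files, and it uses a hypothetical `<data>.json` naming convention for the metadata.

```python
import json
import os
import tempfile
from pathlib import Path


def publish_pair(data_file: Path, metadata: dict, dropbox: Path) -> Path:
    """Expose a DAQ data file plus its metadata file in the F-FTS dropbox.

    The data file is hard-linked first and the metadata file is renamed into
    place last, so a watcher keyed on the metadata file never sees half a pair.
    """
    dropbox.mkdir(parents=True, exist_ok=True)
    os.link(data_file, dropbox / data_file.name)      # atomic, no copy
    meta_path = dropbox / (data_file.name + ".json")   # hypothetical naming
    tmp = dropbox / ("." + meta_path.name + ".tmp")    # assumed ignored by F-FTS
    tmp.write_text(json.dumps(metadata, sort_keys=True))
    os.replace(tmp, meta_path)                          # atomic rename
    return meta_path


if __name__ == "__main__":
    work = Path(tempfile.mkdtemp())
    raw = work / "np04_run000001.dat"  # hypothetical file name
    raw.write_bytes(b"\0" * 1024)
    print(publish_pair(raw, {"file_size": 1024}, work / "dropbox"))
```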

Questions:

- What if the DAQ produces the metadata file? It will land on an arbitrary XRootD storage node and, depending on the DAQ, it may precede or follow the data file. How will F-FTS learn of it and synchronize the two?

Possible issues:

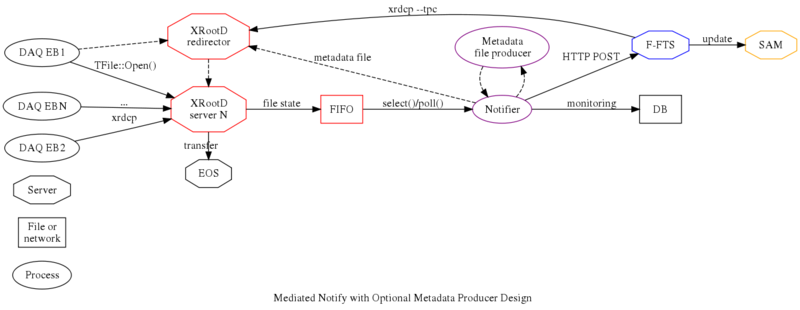

Mediated Notify with optional Metadata Production

- A single F-FTS instance is installed

- A "notifier" process is developed which runs on each storage node.

- It handles the XRootD close event for a DAQ data file.

- It (optionally) runs the metadata file producer

- It notifies F-FTS via HTTP POST

- It can produce compact logs or even DB entries for its actions.
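The HTTP POST to F-FTS can be sketched with the Python standard library. The endpoint URL and the JSON payload shape `{"files": [...]}` are hypothetical; the actual F-FTS notification interface is not specified here.

```python
import json
import urllib.request
from typing import List

# Hypothetical endpoint; the real F-FTS notification URL is not given here.
FTS_NOTIFY_URL = "http://fts.example.org:8080/notify"


def build_notification(file_urls: List[str],
                       url: str = FTS_NOTIFY_URL) -> urllib.request.Request:
    """Build (but do not send) a POST whose JSON body lists XRootD URLs."""
    body = json.dumps({"files": file_urls}).encode("utf-8")  # assumed payload
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


def notify_fts(file_urls: List[str], timeout: float = 10.0) -> int:
    """Send the notification and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for error statuses. A 2xx reply only
    means the notice was accepted, not that the transfer succeeded.
    """
    req = build_notification(file_urls)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Splitting request construction from sending keeps the payload testable without a running F-FTS, and gives the notifier one place to log exactly what it sent.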

Questions:

- As above, what if DAQ produces the metadata file? How to sync the pair?

Possible issues:

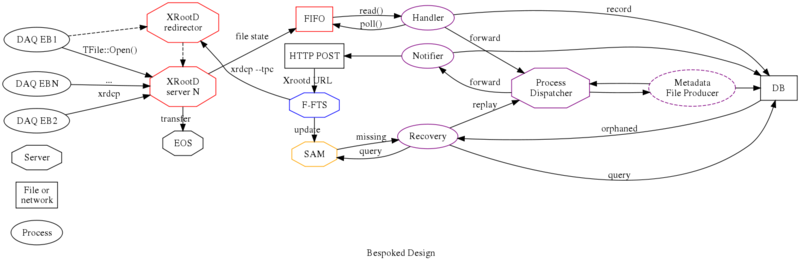

Complex Bespoked

This version provides a high degree of monitoring and control, but at the expense of complexity and of partly reinventing wheels that already exist in FTS. The figure provides a cartoon of the actors involved in buffering the DAQ data files and associated metadata files.

Explanation:

- Multiple DAQ Event Builder (EB) nodes write to XRootD

- The XRootD Redirector sends each transfer to the XRootD server on a specific storage node (not shown)

- The XRootD Redirector writes a message to the FIFO on state changes

- The Handler calls poll() to be notified when the FIFO is ready to read

- The Handler records the message in the local DB, forwards it to the Process Dispatcher and returns to poll() ASAP. Internally this may involve dispatching messages to threaded workers over a thread-safe message queue.

- The Process Dispatcher actually handles the message by running a number of processes for each message. A map of message type to processes is part of its configuration.

- The Notifier is responsible for telling FTS via HTTP POST about files that are ready to transfer and for recording this act in the DB. The notification largely consists of the XRootD URLs of the files to transfer. Note: this is not a persistent HTTP query; the response says nothing about the success of the transfer (check if this is true).

- If the buffer nodes are made responsible for producing the metadata file, the Metadata File Producer process is started at this point. Note, this design currently does not take into account how to run this process on the storage node hosting the DAQ data file.

- The Recovery process is a command line program that can query SAM and the local DB to determine which files entered the buffer but never made it to EOS. It can also resend messages to the Process Dispatcher, replaying the actions in an attempt to reprocess the messages.

- The local DB is accessed by agents via SQLAlchemy using a shared code base for the ORM models. A central table holds info about each incoming XRootD message, and associated tables hold the results of processing the message by the various other agents, including replays.
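The local DB layout described above (a central message table plus per-agent result rows, including replays) could be sketched as follows. To stay dependency-free this uses Python's built-in `sqlite3` module instead of the SQLAlchemy ORM the text proposes, and all table and column names are illustrative. The `unnotified()` query is the local-DB half of what the Recovery process needs; the cross-check against SAM is not shown.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS message (
    id       INTEGER PRIMARY KEY,
    received TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
    event    TEXT NOT NULL,
    lfn      TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS action (
    id         INTEGER PRIMARY KEY,
    message_id INTEGER NOT NULL REFERENCES message(id),
    agent      TEXT NOT NULL,             -- e.g. 'notifier', 'recovery'
    status     TEXT NOT NULL,             -- e.g. 'ok', 'failed'
    is_replay  INTEGER NOT NULL DEFAULT 0
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    con = sqlite3.connect(path)
    con.executescript(SCHEMA)
    return con


def record_message(con: sqlite3.Connection, event: str, lfn: str) -> int:
    cur = con.execute("INSERT INTO message (event, lfn) VALUES (?, ?)", (event, lfn))
    con.commit()
    return cur.lastrowid


def record_action(con, message_id, agent, status, is_replay=False) -> None:
    con.execute(
        "INSERT INTO action (message_id, agent, status, is_replay) VALUES (?, ?, ?, ?)",
        (message_id, agent, status, int(is_replay)))
    con.commit()


def unnotified(con, agent: str = "notifier"):
    """Messages with no successful action by `agent`: candidates for replay."""
    return con.execute(
        """
        SELECT m.id, m.lfn FROM message AS m
        WHERE NOT EXISTS (
            SELECT 1 FROM action AS a
            WHERE a.message_id = m.id AND a.agent = ? AND a.status = 'ok')
        ORDER BY m.id
        """, (agent,)).fetchall()
```

Keeping one row per attempt, rather than updating a status column in place, preserves the history of replays that the Recovery process may need to inspect.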

Options:

The Process Dispatcher can be responsible for writing the message to the DB.

The various agents shown here may be implemented as separate processes or amalgamated to some extent. Eg, the Process Dispatcher may absorb the Handler and Notifier, but the Metadata File Producer and Recovery should probably remain external. The main point is that each "bubble" in the drawing should act asynchronously from the others and not block. Eg, when the Handler notifies the Process Dispatcher, that call should return immediately.
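The non-blocking hand-off between bubbles can be illustrated with a small Process Dispatcher sketch in Python: `dispatch()` only enqueues, and a background thread runs the processes configured for each message type. The configuration shape (message type mapped to a list of argument lists) and the `{lfn}` placeholder are assumptions made for illustration.

```python
import queue
import subprocess
import sys
import threading
from typing import Dict, List, Optional, Tuple


class ProcessDispatcher:
    """Run the configured commands for each message on a background worker.

    dispatch() only enqueues, so the caller (e.g. the Handler) can return
    to poll() immediately.
    """

    def __init__(self, commands: Dict[str, List[List[str]]]):
        self._commands = commands  # message type -> list of argv templates
        self._queue: "queue.Queue[Optional[Tuple[str, str]]]" = queue.Queue()
        self.results: List[Tuple[str, List[str], int]] = []  # (event, argv, rc)
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def dispatch(self, event: str, lfn: str) -> None:
        self._queue.put((event, lfn))  # unbounded queue: never blocks

    def close(self) -> None:
        self._queue.put(None)  # sentinel: stop after pending work
        self._worker.join()

    def _run(self) -> None:
        while True:
            item = self._queue.get()
            if item is None:
                return
            event, lfn = item
            for template in self._commands.get(event, []):
                argv = [arg.replace("{lfn}", lfn) for arg in template]
                proc = subprocess.run(argv, capture_output=True, text=True)
                self.results.append((event, argv, proc.returncode))


if __name__ == "__main__":
    # Hypothetical config: echo the file name on every write-close event.
    cfg = {"closew": [[sys.executable, "-c", "import sys; print(sys.argv[1])", "{lfn}"]]}
    dispatcher = ProcessDispatcher(cfg)
    dispatcher.dispatch("closew", "/data/np04_run000001.dat")
    dispatcher.close()
    print(dispatcher.results)
```

A single worker runs the processes one after another; a pool of workers would let slow processes (eg, the Metadata File Producer) run in parallel at the cost of per-message ordering.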