CERN Prototype

Important Note

Please see the main DUNE Wiki for most up-to-date materials. This page is no longer actively maintained and is kept here for archival and reference purposes, reflecting the early stages of protoDUNE development in 2015-16.

Overview

The protoDUNE experimental program is designed to test and validate the technologies and design that will be applied to the construction of the DUNE Far Detector at the Sanford Underground Research Facility (SURF). The protoDUNE detectors will be run in a dedicated beam line at the CERN SPS accelerator complex. The rate and volume of data produced by these detectors will be substantial and will require extensive system design and integration effort.

As of Fall 2015, "protoDUNE" is the official name for the two apparatuses to be used in the CERN beam test: the single-phase and dual-phase LArTPC detectors. Each received a formal CERN experiment designation:

- NP02 for the dual-phase detector. Yearly report

- NP04 for the single-phase detector. Yearly report

The CERN Proposal and the TDR

- protoDUNE CERN proposal: DUNE DocDB 186

- As of summer 2016, the protoDUNE TDR is a work in progress and is maintained on GitHub. Contact Tom Junk, Brett Viren or Maxim Potekhin for more detail.

Beam, Experimental Hall and other Infrastructure

- LBNE DocDB 9989: Beam Group presentation 11/18/14.

- Integration status as of early July 2015

- EHN1 Extension Coordination - CERN Neutrino Platform Project (sharepoint pages at CERN)

- North Area Extension: installation schedule

Materials and Meetings

- Current series of meetings at CERN - "neutrino platform" and "detector integration"

- Facilities Integration

- protoDUNE measurements/analysis group meetings

- ARCHIVE: CERN Prototype Materials - collection of reference materials and history of this subject

Expected Data Volume and Rates

Important Note

At the time of writing (Summer 2016) this information is still under development and this page is yet to be edited to reflect the most recent methodology and estimates.

Overview

In order to provide the necessary precision for reconstruction of the ionization patterns in the LArTPC, both single-phase and dual-phase designs share the same fundamental characteristics:

- High spatial granularity of readout (e.g. the electrode pattern), and the resulting high channel count

- High digitization frequency (which is essential to ensure a precise position measurement along the drift direction)

Another common factor in both designs is the relatively slow drift velocity of electrons in liquid argon, which is of the order of millimeters per microsecond, depending on the drift volume voltage and other parameters. This leads to a substantial readout window (of the order of milliseconds) required to collect all of the ionization produced in the liquid argon volume by the event of interest. Even though the readout times are substantially different in the two designs, the net effect is similar. Combined with the high digitization frequency in every channel (as explained above), the long readout window leads to a considerable amount of data per event. Each event is comparable in size to a high-resolution digital photograph.
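
The relation between drift velocity, readout window and raw event size can be sketched with a few lines of arithmetic. This is only an illustration: the drift length, drift velocity, channel count, sampling frequency and sample width used below are assumed round numbers and are not taken from this page. The result is the raw size before any compression or zero suppression.

```python
# Illustrative assumptions only; none of these values are quoted on this page.
DRIFT_DISTANCE_MM = 3600.0        # assumed maximum drift length
DRIFT_VELOCITY_MM_PER_US = 1.6    # assumed, "of the order of millimeters per microsecond"
N_CHANNELS = 15360                # assumed number of readout channels
SAMPLING_MHZ = 2.0                # assumed digitization frequency
BYTES_PER_SAMPLE = 2              # assumed: one ADC sample stored in 16 bits


def readout_window_us(drift_mm, velocity_mm_per_us):
    """Time needed for the farthest ionization electrons to reach the readout."""
    return drift_mm / velocity_mm_per_us


def raw_event_size_bytes(n_channels, sampling_mhz, window_us, bytes_per_sample):
    """Raw event size: every channel is sampled for the full window."""
    samples_per_channel = int(round(sampling_mhz * window_us))
    return n_channels * samples_per_channel * bytes_per_sample


window_us = readout_window_us(DRIFT_DISTANCE_MM, DRIFT_VELOCITY_MM_PER_US)
event_bytes = raw_event_size_bytes(
    N_CHANNELS, SAMPLING_MHZ, window_us, BYTES_PER_SAMPLE
)

print(f"readout window: {window_us / 1000:.2f} ms")
print(f"raw event size: {event_bytes / 1e6:.1f} MB")
```

With these assumed inputs the window comes out at a couple of milliseconds, consistent with the order of magnitude quoted above.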

There are a few data reduction (compression) techniques that may be applicable to protoDUNE raw data in order to reduce its size. Some of the algorithms are inherently lossy, such as Zero Suppression (ZS), which rejects parts of the digitized waveforms in LArTPC channels according to certain logic (e.g. when the signal is consistently below a predefined threshold for a period of time). There are also lossless compression techniques such as Huffman coding and others. At the time of writing it is assumed that only lossless algorithms will be applied to the compression of the protoDUNE raw data.
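
The difference between the two families of techniques can be illustrated with a minimal sketch. The function names, the threshold logic and the `min_quiet` parameter below are hypothetical and chosen only for illustration; they do not describe the actual protoDUNE firmware or DAQ algorithms. The first function drops stretches of a waveform that stay below a threshold (lossy), and the second computes Huffman code lengths for a list of symbols (lossless, since the original symbols can be recovered exactly from the resulting code).

```python
import heapq
from collections import Counter


def zero_suppress(samples, threshold, min_quiet=3):
    """Lossy reduction: keep (start_index, values) segments and drop any
    stretch where the signal stays below threshold for min_quiet samples."""
    kept = []
    start = None   # index where the current kept segment begins
    quiet = 0      # consecutive below-threshold samples inside the segment
    for i, s in enumerate(samples):
        if s >= threshold:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= min_quiet:
                end = i - quiet + 1  # exclusive end, trailing quiet samples dropped
                kept.append((start, list(samples[start:end])))
                start, quiet = None, 0
    if start is not None:
        kept.append((start, list(samples[start:len(samples) - quiet])))
    return kept


def huffman_code_lengths(symbols):
    """Lossless coding: return {symbol: code length in bits} for a Huffman code."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): 1}
    # Heap entries are (weight, tiebreak, {symbol: depth}); the unique
    # tiebreak integer ensures the dictionaries are never compared.
    heap = [(w, n, {sym: 0}) for n, (sym, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        w1, _, a = heapq.heappop(heap)
        w2, _, b = heapq.heappop(heap)
        merged = {sym: depth + 1 for sym, depth in {**a, **b}.items()}
        heapq.heappush(heap, (w1 + w2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


waveform = [0, 0, 5, 7, 1, 6, 0, 0, 0, 0, 9, 0]
segments = zero_suppress(waveform, threshold=3)

adc_values = [0] * 8 + [1] * 4 + [2] * 2 + [3] * 2
lengths = huffman_code_lengths(adc_values)
total_bits = sum(lengths[v] for v in adc_values)
```

In the Huffman example the most frequent value receives the shortest code, so the 16 symbols need fewer bits than a fixed 2-bit encoding would.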

It is foreseen that the total amount of data to be produced by the protoDUNE detectors will be of the order of a few petabytes (including commissioning runs with cosmic rays). Both instantaneous and average data rates in the data transmission chain are expected to be substantial, e.g. of the order of GB/s for the former and hundreds of MB/s for the latter. For these reasons, capturing the data streams generated by the protoDUNE DAQ systems, buffering the data, performing fast QA analysis, and transporting the data to sites external to CERN for processing (e.g. FNAL, BNL, etc.) require significant resources and adequate planning.

Taking data in non-ZS mode

As of Q2 of 2016, non-ZS mode is being considered for implementation in protoDUNE (single phase).

- Plans for Raw Data Management (slides)

- protoDUNE/SP data scenarios (spreadsheet): DUNE DocDB 1086

Calibration

- Main Calibration Page

- Calibration Strategy: presentation by I.Kreslo at the protoDUNE Science Workshop in June 2016

Software and Computing

Overview

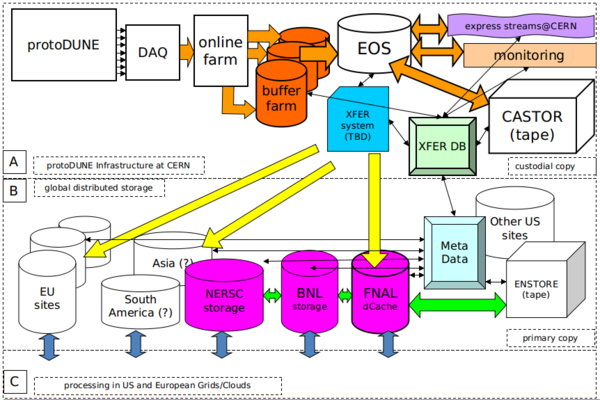

Due to the short time available for data taking, the data to be collected during the experiment is considered "precious" (impossible or hard to reproduce), and redundant storage must be provided for such data. One primary copy would be stored on tape at CERN and another at FNAL, and auxiliary copies will be shared between US sites, e.g. BNL and NERSC. The aim is to reuse existing software from other experiments to move data between CERN and the US with an appropriate degree of automation, error checking and correction, and monitoring.
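
The "error checking" part of such replication can be sketched as a checksum comparison after each copy. This is a generic illustration only: the function names, the choice of SHA-256 and the local directories standing in for remote sites are all assumptions, and the sketch does not represent the interface of any specific transfer system mentioned on this page.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def file_checksum(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def replicate(src, destinations):
    """Copy src into each destination directory and verify every copy.
    Returns {destination path: True if the checksum matches the source}."""
    expected = file_checksum(src)
    results = {}
    for dest_dir in destinations:
        dest = Path(dest_dir) / Path(src).name
        shutil.copyfile(src, dest)
        results[str(dest)] = file_checksum(dest) == expected
    return results


# Demo with local directories standing in for remote storage sites
# (hypothetical file and directory names).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    src = root / "run000001.raw"
    src.write_bytes(b"\x00\x01" * 1024)
    sites = [root / "site_a", root / "site_b"]
    for site in sites:
        site.mkdir()
    results = replicate(src, sites)
```

A copy whose checksum does not match would be flagged as `False` and could then be retried, which is the "correction" step in its simplest form.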

There will be a prompt processing system whose primary purpose is Data Quality Management.

DAQ and Online Monitoring

DAQ links:

- Giles' page hosted at Oxford (subject to change) - to-do list

- protoDUNE DAQ Topics: list of questions, issues and action items (ported from Giles' link above)

- General DAQ Info relevant to online/offline interface

Online monitoring is considered a component of DAQ. While it runs on a separate set of hardware, it receives its input data directly from DAQ and aims to have a minimal latency in delivering various monitoring data to the operators.

Raw Data Handling

Technical info is kept on the CERN Data Handling page. The rest of this section is mainly of historical interest (but still reflects the general design).

A conceptual diagram of the data flow is presented below (primarily for raw data). It largely follows the pattern found in the LHC experiments, i.e. it contains an online buffer from which the data is transmitted to CERN EOS, which serves both for further distribution and as an intermediate staging area for tape storage. Please note that protoDUNE Beam Instrumentation (BI) creates a separate stream of data and is not included in this description. See the BI page for details.

Initial design ideas (as of early 2016) are documented in the paper created during a meeting with FNAL data experts in mid-March 2016. The main idea is to leverage a few existing systems (F-FTS, SAM, etc.) in order to satisfy a number of requirements. A few of the initial requirements are documented below:

- Some use cases for managing the state of data

- Raw Data Management (slides)

- Basic Requirements for the protoDUNE Raw Data Management System: DUNE DocDB 1209

- Finally, the general design of the protoDUNE Data Management System can be accessed in DUNE DocDB 1212.

Online Buffer

Current Technology Choice

The online buffer is one of the central elements for the raw data management system in protoDUNE. The requirements and initial design ideas are presented in the links above (cf DocDB 1209 and 1212). The current design choice (as of Fall of 2016) is a high-performance combination of storage appliances and compute servers to be purchased from DELL.

Technologies previously considered

- SAN technology has the advantage of being available as an appliance. However, based on the available information, it presents its own set of challenges and may not be cost-effective after all (see the SAN page for more info).

- XRootD is a storage clustering technology which provides scalability in CPU, storage capacity and throughput, all of which are important for the proper functioning of the online buffer. There is a "XRootD at BNL" page containing miscellaneous bits of info on how to install and start using XRootD and relevant systems at BNL. The Basic XRootD page is an intro for beginners, which should be helpful to start experimenting with XRootD on your own machine(s).

- XRootD Buffer is a page detailing a few approaches to the design of the buffer based on XRootD and its interaction with F-FTS.

Online/Offline Interface

The online/offline interface is loosely defined as the middleware located between the Event Builders in the DAQ and the mass storage systems at CERN (such as EOS) and elsewhere (such as dCache at FNAL). As such, the interface has the following principal components:

- the online buffer

- F-FTS

- a notification mechanism, which can be as simple as an atomic event signaling the creation of a file which is to be "picked up" by the FTS.
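
One simple way to realize such an atomic "file is ready" signal is to write the file under a temporary name and rename it into a watched directory only once it is complete. The sketch below illustrates that pattern under stated assumptions: the function names, the hidden `.tmp-` prefix, the dropbox directory and the file name are hypothetical, and the scanning function merely stands in for whatever mechanism the FTS actually uses to pick up files.

```python
import os
import tempfile
from pathlib import Path


def publish_atomically(data: bytes, dropbox: Path, name: str) -> Path:
    """Write data to a hidden temporary file in the dropbox, then rename it.
    The rename is atomic on POSIX file systems when source and target are on
    the same file system, so a scanner never sees a partially written file."""
    dropbox.mkdir(parents=True, exist_ok=True)
    fd, tmp_name = tempfile.mkstemp(dir=dropbox, prefix=".tmp-")
    with os.fdopen(fd, "wb") as f:
        f.write(data)
        f.flush()
        os.fsync(f.fileno())
    final = dropbox / name
    os.replace(tmp_name, final)
    return final


def scan_dropbox(dropbox: Path, seen: set) -> list:
    """Return complete files not reported before, ignoring hidden temp files."""
    new = sorted(
        p for p in dropbox.iterdir()
        if p.is_file() and not p.name.startswith(".") and p not in seen
    )
    seen.update(new)
    return new


# Demo: the file appears exactly once, and only after it is complete.
with tempfile.TemporaryDirectory() as tmp:
    dropbox = Path(tmp) / "dropbox"
    seen = set()
    publish_atomically(b"raw event data", dropbox, "run000001.raw")
    first_scan = [p.name for p in scan_dropbox(dropbox, seen)]
    second_scan = [p.name for p in scan_dropbox(dropbox, seen)]
```

The design choice here is that the appearance of the final file name is itself the notification, so no separate message channel is required between the writer and the transfer service.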

DQM and Prompt Processing

Data Quality Monitoring (DQM) aims to fill the gap between the fast, high-bandwidth Online Monitoring with a limited and fixed CPU budget, and Offline Processing which has access to substantially more resources but is much less agile and is not a good tool to produce actionable data for the experiment operators on the time scale of a few minutes to an hour.

The protoDUNE DQM will utilize the protoDUNE prompt processing system (p3s). The proposed design of p3s is documented in DUNE DocDB 1861, and technical notes for p3s developers are hosted on a separate page: p3s.

DQM will include a variety of payloads documented at this link. Both Online Monitoring (OM) and DQM software are based on art/LArSoft/ROOT, which provides flexibility in placing their respective payload jobs, i.e. a high degree of portability of these jobs across OM and DQM is expected.

Metadata and File Catalog

TBD

Storage at CERN

More information (including fairly technical bits) can be found on the CERN Data Handling page.

- EOS is a high-performance distributed disk storage system based on XRootD. It is used by major LHC experiments as the main destination for writing raw data.

- CASTOR is the principal tape storage system at CERN. It has a built-in disk layer, which was earlier utilized in production and other activities, but this is no longer the case since this functionality is handled more efficiently by EOS. For that reason, the disk storage that exists in CASTOR now serves as a buffer for I/O and system functions.

Appendix

Data Characteristics as per the 2015 proposal

This information is presented here mainly for historical reference and to reflect the content of the CERN Proposal.

Estimates were developed under the assumption of Zero Suppression for the single-phase detector. In this case, both data rate and volume are determined primarily by the number of tracks due to cosmic ray muons, recorded within the readout window, which is commensurate with the electron collection time in the TPC (~2ms).

For a quick summary of the data rates, data volume and related requirements see:

A few numbers for the single-phase detector which were used in the proposal:

- Planned trigger rate: 200Hz

- Instantaneous data rate in DAQ: 1GB/s

- Sustained average: 200MB/s

Based on this, the nominal network bandwidth required to link the DAQ to CERN storage elements is ~2 GB/s. This is based on the essential assumption that zero suppression will be used in all measurements. There are considerations for taking some portion of the data in non-ZS mode, which would require approximately 20 GB/s connectivity. Since WA105 specified this as their requirement, DUNE-PT may be able to obtain a link in this range.

The measurement program is still being updated; the total volume of data to be taken will be O(1 PB). Brief notes on the statistics can be found in Appendix II of the "Materials" page.
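
The proposal numbers quoted in this appendix can be cross-checked with simple arithmetic. The sketch below uses only the figures listed above (200 Hz, 1 GB/s, 200 MB/s, roughly 1 PB); the decimal unit convention (1 GB = 10^9 bytes) and the reading of the instantaneous rate as trigger rate times average event size are assumptions made for illustration.

```python
# Figures quoted above; decimal units (1 GB = 1e9 bytes) are an assumption.
TRIGGER_RATE_HZ = 200
INSTANTANEOUS_RATE_BPS = 1e9    # 1 GB/s in the DAQ
SUSTAINED_RATE_BPS = 200e6      # 200 MB/s average
TOTAL_VOLUME_BYTES = 1e15       # order of 1 PB

# Average event size implied by the instantaneous rate and the trigger rate.
event_size_mb = INSTANTANEOUS_RATE_BPS / TRIGGER_RATE_HZ / 1e6

# Fraction of the time the DAQ would have to run at the instantaneous rate
# in order to produce the sustained average.
duty_factor = SUSTAINED_RATE_BPS / INSTANTANEOUS_RATE_BPS

# Continuous running time needed to accumulate the total volume.
days_to_total = TOTAL_VOLUME_BYTES / SUSTAINED_RATE_BPS / 86400

print(f"implied event size: {event_size_mb:.1f} MB")
print(f"duty factor:        {duty_factor:.2f}")
print(f"days to reach 1 PB: {days_to_total:.1f}")
```

Under these assumptions the implied event size is a few megabytes, in line with the "high-resolution digital photograph" comparison made earlier, and a petabyte corresponds to roughly two months of continuous data taking at the sustained rate.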

Data Transfer: Examples and Reference Materials

- StashCache

- Archiving Scientific Data Outside of the Traditional HEP Domain, Using the Archive Facilities at Fermilab

- CMS FTS user tools

- The NOvA Data Acquisition System

- The DIRAC Data Management System and the Gaudi dataset federation

- STORAGE MANAGEMENT AND ACCESS IN WLHC COMPUTING GRID (a thesis by Flavia Donno)

- Towards an HTTP Ecosystem for HEP Data Access