This Page

As of 2016 this page is a work in progress. As the development of online systems for protoDUNE continues, more content will be added. See the protoDUNE online buffer pages for more info.

xrootd@BNL

Currently there is a small DUNE cluster (for historical reasons named the "lbne cluster") at Brookhaven National Lab under the umbrella of RACF (RHIC and ATLAS Computing Facility). The machines have names like lbne0001 etc. Xrootd software is deployed on all of these. To use it, the user needs to be authenticated with an X.509 certificate by the xrootd service and authorized to access it by system administrators (please contact Brett Viren or Maxim Potekhin for further information).

Once authorized on the site, the user will need to use the following commands to obtain the Grid proxy:

setenv GLOBUS_LOCATION /afs/rhic.bnl.gov/@sys/opt/vdt/globus

source $GLOBUS_LOCATION/etc/globus-user-env.csh

grid-proxy-init

...and enter the passphrase as required. This makes sure the user can be authenticated to the xrootd service and is allowed to use it.
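When the proxy is requested repeatedly (for instance from a wrapper script), it can be convenient to ask for a new one only if no valid proxy exists. The following is a minimal Bourne-shell sketch of that idea, not part of the BNL setup itself. It assumes the Globus environment has already been set up as shown above and that `grid-proxy-info -exists` reports the proxy state through its exit status. The function name `ensure_grid_proxy` and the `PROXY_INFO` / `PROXY_INIT` override variables are hypothetical, introduced only for illustration and testing.

```shell
# Sketch: request a Grid proxy only when no valid one is present.
# PROXY_INFO and PROXY_INIT are hypothetical overrides; by default the
# standard Globus commands grid-proxy-info and grid-proxy-init are used.
ensure_grid_proxy() {
    info_cmd="${PROXY_INFO:-grid-proxy-info}"
    init_cmd="${PROXY_INIT:-grid-proxy-init}"

    # grid-proxy-info -exists returns 0 when a valid proxy exists.
    if "$info_cmd" -exists >/dev/null 2>&1; then
        echo "proxy already valid"
    else
        echo "requesting new proxy"
        # Prompts for the passphrase, as described above.
        "$init_cmd"
    fi
}
```

Calling `ensure_grid_proxy` before a transfer keeps the passphrase prompt from appearing when a usable proxy is already in place.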

The following is an example of a shell command that will transport a single file from FNAL to BNL:

xrdcp root://lbnelrd.rcf.bnl.gov//lbne/mc/lbne/simulated/001/singleparticle_antimu_20140801_Simulation1.root \
/tmp/singleparticle_antimu_20140801_Simulation1.root
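The command above follows a simple pattern: a `root://` URL built from the redirector host and an absolute path, followed by a local destination. A small sketch of that pattern as shell functions is given below. The function names `xrootd_url` and `xrootd_fetch` and the `XRDCP` override variable are hypothetical helpers for illustration, not part of the xrootd distribution.

```shell
# Sketch: build a root:// URL from a host and an absolute path.
# The absolute path starts with "/", which yields the double slash
# after the host name seen in the example above.
xrootd_url() {
    host="$1"
    path="$2"
    printf 'root://%s/%s\n' "$host" "$path"
}

# Sketch: copy one remote file into a local directory (default /tmp),
# keeping the file's base name. XRDCP is a hypothetical override of
# the client command, useful for dry runs.
xrootd_fetch() {
    host="$1"
    path="$2"
    dest_dir="${3:-/tmp}"
    "${XRDCP:-xrdcp}" "$(xrootd_url "$host" "$path")" "$dest_dir/$(basename "$path")"
}
```

For example, `xrootd_fetch lbnelrd.rcf.bnl.gov /lbne/mc/lbne/simulated/001/singleparticle_antimu_20140801_Simulation1.root` would run the same copy as the command above, and setting `XRDCP=echo` first prints the command arguments instead of executing the transfer.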

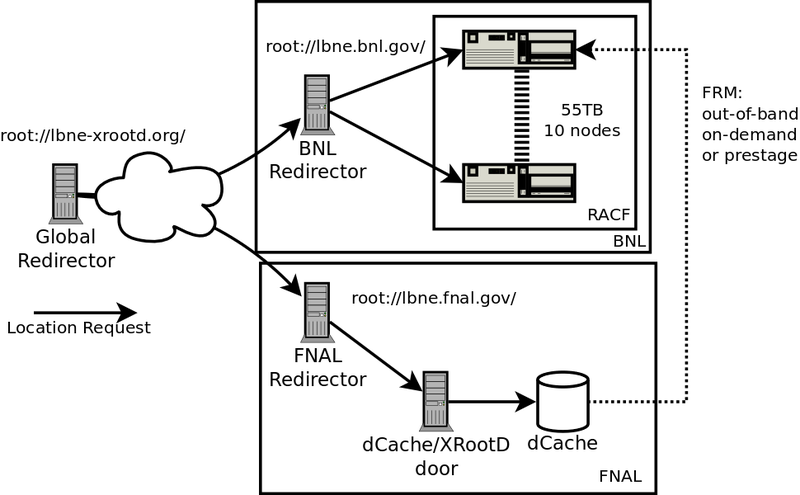

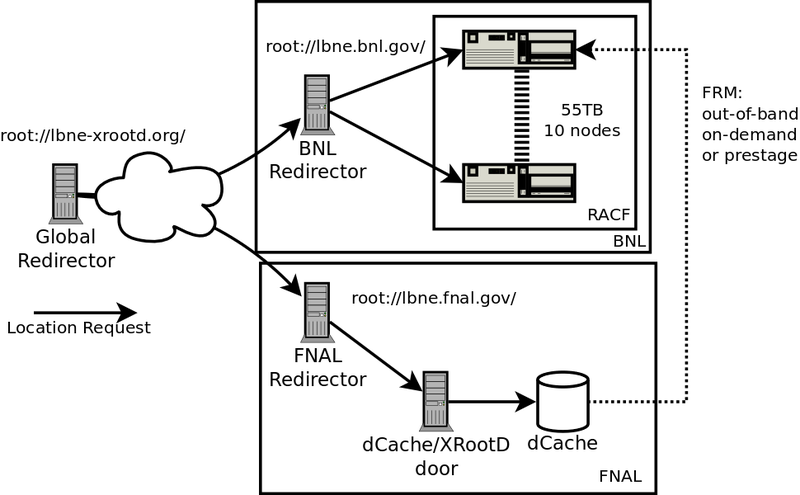

Possible xrootd architecture for medium term

The idea behind the architecture proposed here is to achieve federation of storage and access to data across a few data centers (e.g. national labs) with a modest amount of effort and resources. In this approach, federation is achieved by using a "global redirector", which allows xrootd services to locate a particular piece of data within the federation.

Misc

dCache/xrootd

- There is a comprehensive review of dCache/xrootd. This document is quite relevant as it explains how dCache storage at FNAL is equipped with an "xroot door" so that the data content of dCache is exposed to external clients.

Advice to beginners on running an XRootD service

See the Basic XRootD page which contains a few helpful tips on how to install and start running a basic XRootD cluster for initial experimentation.

Global Paths

For Xrootd we can have global Xrootd paths like:

root://data.<tbd>.org/path/to/file.root

In the future, however, we may want to serve data files over other protocols in the same domain/namespace, for example:

http://data.<tbd>.org/path/to/file.root

Since the two are on different ports this should be okay.
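To illustrate the idea of one namespace served over several protocols, here is a minimal sketch that derives both URL forms from a single domain and path. The function name `global_url` is hypothetical, and the domain is passed in as an argument because the real one is still to be decided. The sketch reproduces the single-slash form shown above.

```shell
# Sketch: build a global URL for a given protocol scheme, domain and path.
# The same domain and path are reused for every scheme, so only the
# protocol prefix differs between the resulting URLs.
global_url() {
    scheme="$1"
    domain="$2"
    path="$3"
    # Strip one leading "/" from the path so exactly one slash
    # separates the domain from the path.
    printf '%s://%s/%s\n' "$scheme" "$domain" "${path#/}"
}
```

With a placeholder domain, `global_url root data.example.org /path/to/file.root` and `global_url http data.example.org /path/to/file.root` give the xrootd and HTTP forms of the same file.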
